12.1 Introduction

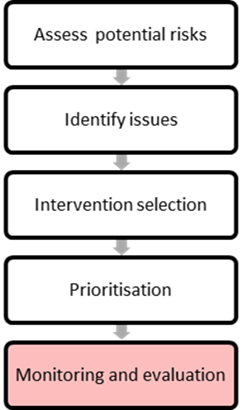

The final step of the risk assessment process is the monitoring, analysis and evaluation of interventions (Figure 12.1).

As described in Chapter 3. The Road Safety Management System, monitoring, analysis and evaluation is the ‘systematic and ongoing measurement of road safety outputs and outcomes (intermediate and final), and the evaluation of interventions to achieve the desired focus on results’ (GRSF, 2009). These tasks are often overlooked but are essential for the effective management of road safety.

Earlier chapters have provided information on the role of monitoring, analysis and evaluation in road safety management, including its importance as part of road safety targets and programmes (Chapter 3. The Road Safety Management System, and to a lesser extent, Chapter 6. Road Safety Targets, Investment Strategies Plans and Projects and Chapter 8. Design for Road User Characteristics and Compliance); and the role it plays in data requirements (Section 5.2 Identifying Data Requirements). This chapter concentrates on the evaluation process at the network and project level and includes information on the importance of this process as well as how to undertake monitoring and evaluation.

Monitoring refers to the systematic collection of data on the performance of a road safety programme or intervention during or after its implementation. Analysis is the study of that data to interpret it, for example to determine the factors contributing to crashes. Evaluation uses the results of the analysis to determine the effect of the treatment or programme.

The purpose of the monitoring and evaluation process is to:

- Identify and measure any changes that have occurred in crash frequency or severity and determine whether the objectives of the safety programme or project have been reached.

- Identify any unwanted or unexpected effects that have occurred as a result of the implementation.

- Establish public attitudes towards the intervention and whether any concerns have been raised.

- Evaluate whether the intervention has had an impact on intermediate measures (such as traffic distributions or speeds).
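The first purpose above, measuring change in crash frequency or severity, can be illustrated with a minimal sketch. It assumes crash counts are available for a before period and an after period of possibly different lengths, and compares annualised rates. The names `PeriodCounts` and `summarise_change` and all figures are hypothetical and purely illustrative. A simple comparison of this kind does not account for background trends, traffic growth or regression to the mean, so it should be read as a first look rather than an estimate of effect.

```python
from dataclasses import dataclass


@dataclass
class PeriodCounts:
    """Crash counts observed over one monitoring period (hypothetical structure)."""
    years: float          # length of the period in years
    fatal_serious: int    # fatal and serious injury crashes
    all_injury: int       # all injury crashes


def annual_rate(count: int, years: float) -> float:
    """Convert a count over a period into crashes per year."""
    if years <= 0:
        raise ValueError("period length must be positive")
    return count / years


def percent_change(before: float, after: float) -> float:
    """Percentage change from the before value to the after value."""
    if before == 0:
        raise ValueError("before value is zero; percentage change is undefined")
    return 100.0 * (after - before) / before


def summarise_change(before: PeriodCounts, after: PeriodCounts) -> dict[str, float]:
    """Percentage change in annualised crash rates, by severity group."""
    return {
        "fatal_serious": percent_change(
            annual_rate(before.fatal_serious, before.years),
            annual_rate(after.fatal_serious, after.years),
        ),
        "all_injury": percent_change(
            annual_rate(before.all_injury, before.years),
            annual_rate(after.all_injury, after.years),
        ),
    }


# Illustrative figures only, not taken from any real site.
before = PeriodCounts(years=3.0, fatal_serious=12, all_injury=45)
after = PeriodCounts(years=2.0, fatal_serious=4, all_injury=24)
change = summarise_change(before, after)
for severity, pct in change.items():
    print(f"{severity}: {pct:+.1f}% change in crashes per year")
```

Reporting severity groups separately shows whether a treatment has reduced severe outcomes even where total crash numbers have changed little.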

Performance indicators (KPIs or SPIs) can play a critical role in implementing the Safe System approach to road safety. They delve into the causes of crashes and help track progress, enabling process-oriented interventions that address various road-safety issues and reduce road users’ risk of death or serious injury.

Developing performance indicators involves identifying and prioritising policy areas, selecting relevant indicators, continuously monitoring and evaluating progress, and aligning selected indicators with national road safety strategies. Regular monitoring, realistic target setting, and the flexibility to update and introduce new indicators are crucial.
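As a rough illustration of monitoring an indicator against a target, the sketch below computes an indicator as a share (for example, the proportion of observed drivers complying with the speed limit) and checks it against a straight-line path from a baseline value to a target value. The linear trajectory, the function names and all figures are assumptions made for illustration; a national strategy may define its indicators and interim targets differently.

```python
def indicator_share(compliant: int, observed: int) -> float:
    """Indicator value as the share of compliant observations."""
    if observed <= 0:
        raise ValueError("at least one observation is required")
    return compliant / observed


def required_value(baseline_year: int, baseline_value: float,
                   target_year: int, target_value: float, year: int) -> float:
    """Value needed in `year` to stay on a straight line from baseline to target.

    The straight-line path is an assumption made for this sketch.
    """
    if target_year <= baseline_year:
        raise ValueError("target year must be after baseline year")
    progress = (year - baseline_year) / (target_year - baseline_year)
    return baseline_value + progress * (target_value - baseline_value)


def is_on_track(observed_value: float, required: float,
                higher_is_better: bool = True) -> bool:
    """Compare the observed indicator with the value required at this point."""
    if higher_is_better:
        return observed_value >= required
    return observed_value <= required


# Illustrative figures only: 60% compliance in 2020, target of 90% by 2030,
# and a 2024 survey in which 700 of 1,000 observed drivers complied.
share_2024 = indicator_share(compliant=700, observed=1000)
needed_2024 = required_value(2020, 0.60, 2030, 0.90, 2024)
on_track = is_on_track(share_2024, needed_2024)
print(f"observed {share_2024:.2f}, required {needed_2024:.2f}, on track: {on_track}")
```

The `higher_is_better` flag allows the same check to be used for indicators where a lower value is the goal, such as the share of vehicles exceeding the speed limit.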

A successful evaluation process requires careful planning at the outset, which includes collecting baseline data, identifying the aims before implementation, and considering the different methods of analysis that could be used for the evaluation. It is also essential that the results and feedback of the evaluation study are properly circulated amongst stakeholders and other interested agencies.

CASE STUDY – Using Safety Performance Indicators to Improve Road Safety - The case of Korea

Over the last decade, Korea has reduced road fatalities by roughly 50%. Various Korean ministries and agencies are actively working to improve road safety. They have set ambitious targets to reduce road fatalities further, focusing on vulnerable groups.

This report emphasises the importance of using safety performance indicators to complement traditional statistics and current road safety strategies for a more comprehensive understanding of road safety. While the report identifies indicators that may be appropriate in the Korean context, its findings will be relevant for other countries looking to deploy safety performance indicators in the future.

The report’s findings emphasise the critical role of adequate road safety data and standardised methodologies in developing safety performance indicators for road safety management. Specifically, any data used in developing and maintaining indicators should be available, accessible, trustworthy, comparable, stable, simple, and aligned with safety objectives.

Road-safety policies and programmes should have mechanisms for collecting evidence to feed into the policy formulation process. Long-term thinking is also encouraged, with the need to set ambitious but feasible goals. Integrating performance indicators into national road safety strategies and periodically reviewing and redefining these strategies is essential for long-term and sustained road safety improvements.


There are three main types of evaluation. One or more may be appropriate for a study, depending on what the evaluation aims to establish:

- Process or ‘formative’ evaluation: assesses whether the programme or project was carried out as planned. This helps to identify the strengths and weaknesses of the implementation process and how it can be improved for future use.

- Outcome evaluation: assesses the degree to which the programme has created change and whether it has met its objectives. Where objectives have not been met, it also establishes whether the recorded or observed impacts were negative or absent.

- Impact evaluation: a form of outcome evaluation that assesses whether the intervention (whether a single treatment or an integrated programme) has brought about the intended change. This type of assessment is typically more scientifically rigorous and commonly examines cause-and-effect relationships. It should look beyond the aim of the treatment or programme and consider any negative or unexpected impacts that may have resulted from implementation. This can highlight changes that may be required in the design of a treatment, in the selection of the target audience, or in the delivery method of a programme.

Both qualitative and quantitative methods can be used for an evaluation study. Qualitative methods, such as focus groups or open-answer questionnaires, are most useful in process and outcome evaluations and can give insight into why an intervention may not have been successful.

Quantitative studies produce more rigorous outputs through the use of controlled trials or before-and-after studies.
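One common quantitative design is a before-and-after study with a comparison group, in which the change at untreated sites is used to estimate what would have happened at the treated sites without the intervention. The sketch below is a minimal illustration of that calculation under the assumption that the comparison sites share the same background trends as the treated sites. The function name and all figures are hypothetical, and the sketch does not correct for regression to the mean or report statistical uncertainty.

```python
def comparison_group_effect(treated_before: int, treated_after: int,
                            comparison_before: int, comparison_after: int
                            ) -> tuple[float, float]:
    """Before-and-after estimate adjusted by a comparison group.

    Returns (expected_after, ratio), where expected_after is the crash count
    expected at the treated sites had they followed the comparison-group trend,
    and ratio is observed / expected (below 1.0 suggests a reduction).
    """
    if min(treated_before, comparison_before, comparison_after) <= 0:
        raise ValueError("before counts and comparison-after count must be positive")
    trend = comparison_after / comparison_before      # background change
    expected_after = treated_before * trend           # counterfactual estimate
    ratio = treated_after / expected_after
    return expected_after, ratio


# Illustrative figures only, with equal-length before and after periods.
expected_after, ratio = comparison_group_effect(
    treated_before=40, treated_after=24,
    comparison_before=200, comparison_after=180,
)
print(f"expected without treatment: {expected_after:.1f} crashes")
print(f"estimated change: {100.0 * (ratio - 1.0):+.1f}%")
```

In this made-up example, crashes at the treated sites fell from 40 to 24, but part of that fall is attributed to the general trend seen at the comparison sites, so the adjusted estimate is smaller than the raw change.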

As identified in Section 11.4 Priority Ranking Methods and Economic Assessment, information on intervention effectiveness is also important in assessing the likely benefits of safety improvements. The evaluation process is important for improving knowledge regarding the effectiveness of different road safety interventions in different types of environments. However, agencies must be cautious about generalising the results of an evaluation. The use of such information in the selection and prioritisation of interventions is discussed in Chapter 11. Intervention Selection and Prioritisation, while this chapter concentrates on methods for monitoring and evaluation.

How do I get started?

- Processes should be established for the collection of relevant data. For those just getting started, this collection could be undertaken as part of a corridor or area demonstration project.

- Staff and financial resources need to be provided for monitoring, analysis and evaluation, and key road agency staff and stakeholders should be trained in basic analysis and evaluation methods.

- Consideration should be given at the outset to the storage and maintenance of the data over time and how it will be used.

- Establish how success will be measured and what information is needed at the outset.